Images and video are a great way to create visual experiences, but large libraries can be difficult to manage without tools that provide things like searchability. To help, we can use AI to categorize and label our media as we import it into our project, with tools like autotagging with Google Vision AI.

What is media tagging and categorization?

When working with media, in our context images, we need a way to be able to describe what’s in an image, especially if we’re trying to provide an easy-to-use experience around that media, such as searching or just simply browsing.

To do this, we can add metadata to our media, using keywords that describe what’s in that image, which is where our tags and categories come in.
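As a rough illustration of why tags help, here is a minimal sketch of a lookup from tag to images. The image records and IDs are made up for the example; they are not part of the starter app or of Cloudinary.

```javascript
// Hypothetical image records: each has an id and a list of descriptive tags.
const images = [
  { id: 'img-1', tags: ['mountain', 'sky', 'snow'] },
  { id: 'img-2', tags: ['beach', 'sky'] },
  { id: 'img-3', tags: ['mountain', 'forest'] }
];

// Build a lookup from tag -> list of image ids that carry that tag.
function buildTagIndex(records) {
  const index = new Map();
  for (const { id, tags } of records) {
    for (const tag of tags) {
      if (!index.has(tag)) index.set(tag, []);
      index.get(tag).push(id);
    }
  }
  return index;
}

const tagIndex = buildTagIndex(images);
console.log(tagIndex.get('mountain')); // [ 'img-1', 'img-3' ]
console.log(tagIndex.get('sky'));      // [ 'img-1', 'img-2' ]
```

With an index like this, browsing or searching by keyword becomes a simple lookup instead of a scan through every image.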

The problem is that having a human do that work doesn’t really scale. If you’re categorizing your personal image library, it might be small enough to be manageable, but even that’s unlikely now that smartphones with amazing cameras dominate.

So we need a way to do this work automatically, with something that can programmatically read our images and tell us what’s inside.

What is Google Vision?

Google’s Vision AI is a product and API that applies machine learning to the problem of categorizing images with a computer.

Based on huge sample datasets, the Vision API allows you to programmatically look at images and generate a list of labels for each image.

In our case, these labels serve us well as tags or categories that we can use in our apps as we build a way for people to work through those huge amounts of images.

How does Google Vision work with Cloudinary?

Along with Cloudinary’s core product offerings is a marketplace of Add-ons that help extend that functionality.

Google Auto Tagging is one of those add-ons. By simply enabling it in our account, we can tell Cloudinary to use it to automatically tag our images any time we upload one.

For example, if I uploaded an image of a mountain, not only will I be able to programmatically see that it is indeed a mountain, but I can learn other things, such as if it has an ice cap or whether it appears to be winter or another season.

This makes for a nice and easy way to categorize our images anytime we add a new image to our library.

What are we going to build?

We’re going to spin up a Next.js app from a demo starter that has a simple upload widget that loads the image into the UI.

We’ll then take that image and learn how we can upload it to Cloudinary using Signed Uploads where we’ll configure our uploads to automatically tag our images with relevant labels.

The uploading will take place in a serverless function because a Signed Upload requires our Cloudinary credentials, which we don’t want available inside of the client-side app.
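To get a feel for why the API Secret has to stay on the server, here is a minimal sketch of how a signed request can be produced. It follows my understanding of Cloudinary’s documented signing scheme (sort the parameters, join them as key=value pairs, append the secret, hash with SHA-1). The function name and values are placeholders, and in this walkthrough the SDK handles signing for us, so treat this as an illustration only.

```javascript
const crypto = require('crypto');

// Sketch of signing: sort params by name, join them as key=value pairs
// with "&", append the API secret, and hash the resulting string.
function signParams(params, apiSecret) {
  const toSign = Object.keys(params)
    .sort()
    .map((key) => `${key}=${params[key]}`)
    .join('&');
  return crypto.createHash('sha1').update(toSign + apiSecret).digest('hex');
}

// Placeholder values for illustration only (assumptions, not real credentials).
const exampleSecret = 'not-a-real-secret';
const signature = signParams(
  { timestamp: 1700000000, categorization: 'google_tagging' },
  exampleSecret
);
console.log(signature.length); // 40 (a SHA-1 digest as hex characters)
```

Anyone holding the secret can produce valid signatures, which is the reason it belongs in a serverless function rather than in browser code.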

For a practical use case, we’ll then see how we can look up our tags and display them, along with their images, in the UI!

Disclaimer: I work for Cloudinary as a Developer Experience Engineer.

Step 0: Creating a new Next.js app from a demo starter

We’re going to start off with a new Next.js app using a starter that includes a simple upload form that reads the image and places it on the page.

Inside of your terminal, run:

yarn create next-app my-tagged-uploads -e https://github.com/colbyfayock/demo-image-upload-starter

# or

npx create-next-app my-tagged-uploads -e https://github.com/colbyfayock/demo-image-upload-starter

Note: feel free to use a different value than my-tagged-uploads as your project name!

Once installation has finished, you can navigate to that directory and start up your development server:

cd my-tagged-uploads

yarn dev

# or

npm run dev

And once loaded, you should now be able to open up your new app at http://localhost:3000!

You can even test this out by selecting an image and seeing it appear in the UI.

While nothing will happen and you can’t actually upload anything, it’s a good start for our walkthrough.

We’ll dive into some of the code making this work in the next steps, but to get an idea as to how this works, you can check out the pages/index.js file, our homepage, which mostly consists of our form and some handler functions that will allow us to read the selected image file and render it in the app.

Step 1: Creating a serverless API endpoint in Next.js

We’ll get started by setting up our endpoint, which is where we’ll send our image, and then upload it to Cloudinary.

To do this, first create a new file called upload.js inside of pages/api.

Inside of pages/api/upload.js add:

export default async function handler(req, res) {

res.status(200).json({

success: true

});

}

Here we’re defining and exporting a default asynchronous function, where all of our function logic will live. Anytime we hit our endpoint, this function will run.

We can see it working by opening up our browser and visiting http://localhost:3000/api/upload.

Now we’ll be POSTing data to this endpoint, so we won’t be able to make this work by just visiting the URL in the browser, but we’ll use that same URL from within our app.
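Since the endpoint is only meant to receive POST requests, one option is to reject other methods explicitly. The sketch below is an optional hardening step, not something the walkthrough requires, and note that it would make the browser visit from the previous paragraph return a 405. The `createMockResponse` helper is a made-up stand-in so the example runs outside of Next.js.

```javascript
// Sketch of a handler that only accepts POST requests.
// In pages/api/upload.js this function would be the default export.
function handler(req, res) {
  if (req.method !== 'POST') {
    res.setHeader('Allow', 'POST');
    return res.status(405).json({ error: 'Method not allowed' });
  }
  return res.status(200).json({ success: true });
}

// Tiny stand-in for the response object so the sketch runs outside Next.js.
function createMockResponse() {
  return {
    statusCode: null,
    headers: {},
    body: null,
    setHeader(name, value) { this.headers[name] = value; return this; },
    status(code) { this.statusCode = code; return this; },
    json(payload) { this.body = payload; return this; }
  };
}

const getResponse = createMockResponse();
handler({ method: 'GET' }, getResponse);
console.log(getResponse.statusCode); // 405

const postResponse = createMockResponse();
handler({ method: 'POST' }, postResponse);
console.log(postResponse.statusCode); // 200
```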

To do that, let’s create a POST request to our API endpoint any time we click the upload button in the app.

Inside of pages/index.js, you should see a handleOnSubmit function, which is attached to our form through the onSubmit handler.

Anytime someone clicks Upload, it submits that form, where we intercept it with our function.

Now inside our function, let’s create a new request to our API.

Inside handleOnSubmit add:

const data = await fetch('/api/upload', {

method: 'POST',

body: JSON.stringify({

test: true

})

}).then(r => r.json());

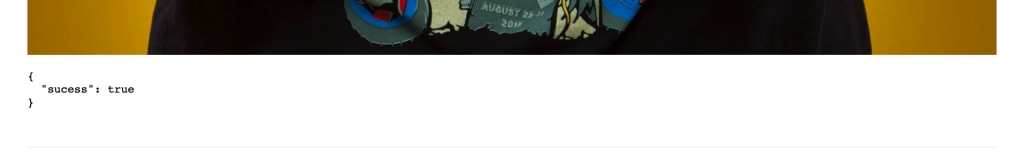

setUploadData(data);We’re using the browser’s fetch API to POST a request to our /api/upload endpoint where we’re simply sending a test object.

Once our request completes, we can see what that response looks like by running our setUploadData function, which is tied to an instance of useState that simply dumps the data into the page.

Again, we can see this working by selecting an image and clicking Upload, where it’s immediately placed with our same "succes": true as we saw when we visited our endpoint in the browser!

To make sure we can actually see the test data we’re posting, we can parse our request’s body in our function.

Inside pages/api/upload.js let’s update our function to:

export default async function handler(req, res) {

const data = JSON.parse(req.body);

res.status(200).json(data);

}

If we try to click Upload in the browser again, we should see our test object instead of the success object, which is exactly what we want!
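One thing worth knowing: JSON.parse(req.body) works here because our fetch call sends a plain string without a Content-Type: application/json header, so the body arrives as a string. As far as I know, if that header were set, Next.js would parse the body into an object before our function runs. The hypothetical helper below is a sketch that tolerates both cases.

```javascript
// Accepts either a raw JSON string or an already-parsed object.
// Returns null when the input can't be read as JSON.
function readJsonBody(body) {
  if (typeof body === 'string') {
    try {
      return JSON.parse(body);
    } catch (error) {
      return null; // not valid JSON
    }
  }
  if (body && typeof body === 'object') {
    return body;
  }
  return null;
}

console.log(readJsonBody('{"test":true}')); // { test: true }
console.log(readJsonBody({ test: true }));  // { test: true }
console.log(readJsonBody('oops'));          // null
```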

But now that we have our basic request working, in the next step we’ll learn how to configure Cloudinary so that we can upload images to our account.

Step 2: Installing and configuring the Cloudinary SDK

We’ll install the Cloudinary SDK from npm, using either yarn or npm.

Inside of your terminal, run:

yarn add cloudinary

# or

npm install cloudinary

Once complete, we want to import our package into our serverless function.

At the top of pages/api/upload.js add:

const cloudinary = require('cloudinary').v2;

We’re specifically importing version 2 of the Cloudinary SDK by adding the .v2 at the end of our require statement.

Next, we need to configure the SDK with our Cloudinary account. To do that we’ll need our:

- Cloud Name

- API Key

- API Secret

Under the Cloudinary import, add:

cloudinary.config({

cloud_name: process.env.CLOUDINARY_CLOUD_NAME,

api_key: process.env.CLOUDINARY_API_KEY,

api_secret: process.env.CLOUDINARY_API_SECRET

});

We don’t want to explicitly define those values right in the code, as they’re sensitive, so we’ll use environment variables, which allow us to securely inject them into our serverless function.

Tip: Your Cloud Name is typically publicly shown in your web app, so if you prefer, you can define your Cloud Name right in the config.

To define those variables, create a new file called .env.local at the root of your project and add:

CLOUDINARY_CLOUD_NAME="[Your Cloud Name]"

CLOUDINARY_API_KEY="[Your API Key]"

CLOUDINARY_API_SECRET="[Your API Secret]"

Next, let’s find those values.

All three of these can be found right inside of your main Cloudinary dashboard.

Once you log in, you should see all three near the top under Account Details. Hover over a value to reveal a little copy button, then grab the value and paste it into your .env.local file.
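A missing or misspelled variable tends to surface later as a confusing upload error, so it can help to check for them up front. This is a small sketch using a made-up helper, `findMissingEnv`, and the variable names from the .env.local file above.

```javascript
const REQUIRED_VARS = [
  'CLOUDINARY_CLOUD_NAME',
  'CLOUDINARY_API_KEY',
  'CLOUDINARY_API_SECRET'
];

// Returns the names of any required variables that are missing or empty.
function findMissingEnv(env, names = REQUIRED_VARS) {
  return names.filter((name) => !env[name]);
}

const missing = findMissingEnv(process.env);
if (missing.length > 0) {
  console.warn(`Missing environment variables: ${missing.join(', ')}`);
}
```

You could run a check like this at the top of the serverless function while developing and remove it once everything is wired up.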

Note: You should use your own Cloud Name as you need your own unique API Key and Secret to follow along.

With the Cloudinary SDK configured, we’re ready for the next step, where we’ll upload our images!

Step 3: Uploading images to Cloudinary in a serverless function with the Cloudinary SDK

To start off, we need to post our image data to our API endpoint so that we can take that image and send it up to Cloudinary.

Let’s first look in our app to see what’s currently going on.

Inside pages/index.js, any time a file is selected we run the handleOnChange function.

Once that happens, we run the following code:

function handleOnChange(changeEvent) {

const reader = new FileReader();

reader.onload = function(onLoadEvent) {

setImageSrc(onLoadEvent.target.result);

setUploadData(undefined);

}

reader.readAsDataURL(changeEvent.target.files[0]);

}

Note: if you’re following along, this code is already in your app, but it’s helpful to know what’s happening!

We’re using the browser’s FileReader API where we pass our selected file to the readAsDataURL method which will give us a Base64 encoded string that represents the data of our image file.

Finally, once that happens, the onload event triggers where we update our app’s state to that Base64 string and render it to the page.

Now the thing we care about here is that we’re obtaining the Base64 string version of our image, which is perfect for sending along in a request as part of a data object.
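To make the format a little more concrete, here is a sketch that takes a Base64 data URL apart. It runs in Node.js (it relies on Buffer) and uses a tiny text payload instead of a real image; `parseDataUrl` is a hypothetical helper, not part of the starter.

```javascript
// Splits a Base64 data URL into its MIME type and decoded bytes.
// Returns null if the string isn't a Base64 data URL.
function parseDataUrl(dataUrl) {
  const match = /^data:([^;,]+);base64,(.*)$/.exec(dataUrl);
  if (!match) return null;
  const [, mimeType, base64] = match;
  const bytes = Buffer.from(base64, 'base64');
  return { mimeType, bytes };
}

// A tiny stand-in payload; a real image would look like data:image/png;base64,...
const sample = 'data:text/plain;base64,aGVsbG8=';
const parsed = parseDataUrl(sample);
console.log(parsed.mimeType);         // text/plain
console.log(parsed.bytes.toString()); // hello
console.log(parsed.bytes.length);     // 5
```

Keep in mind that Base64 text is roughly a third larger than the file it encodes, and Next.js API routes cap the request body size by default (1 MB, as far as I know), so very large images may need a different approach.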

We can use the same imageSrc which is being used to show our image on the page to send up to our API endpoint!

So inside of the handleOnSubmit function of pages/index.js, let’s update our body to:

body: JSON.stringify({

image: imageSrc

})

Once our API endpoint receives that data, we’re going to send it right along to Cloudinary, which accepts that format of image data.

Inside of pages/api/upload.js let’s update the contents of our function to:

const { image } = JSON.parse(req.body);

const results = await cloudinary.uploader.upload(image);

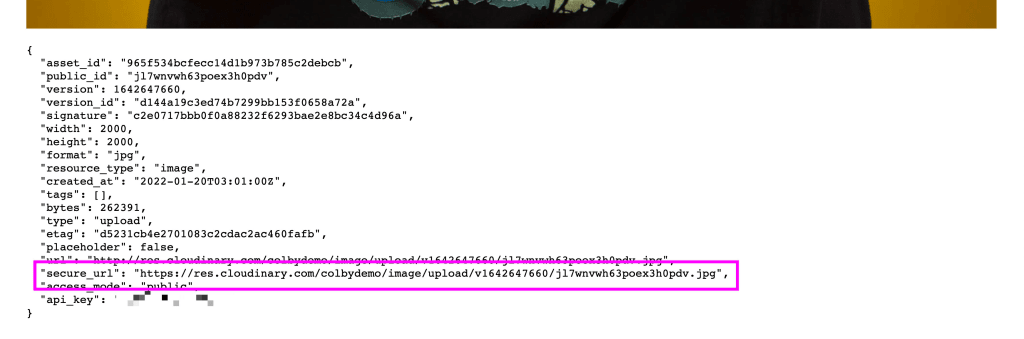

res.status(200).json(results);But now if we try to upload an image again, we’ll see that once the requests finishes, we now see an object including all of the details of our new Cloudinary upload!

Tip: if you’re seeing an error, try wrapping it with a try/catch to see what’s happening!
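As a sketch of what that try/catch could look like, the example below wraps the upload and returns the error message to the client. To keep it runnable without the Cloudinary SDK, the upload function is injected; in the real endpoint it would be cloudinary.uploader.upload. The names `createUploadHandler` and `createFakeResponse` are made up for this example.

```javascript
// `upload` is injected so the sketch runs without the Cloudinary SDK;
// in the real endpoint it would be cloudinary.uploader.upload.
function createUploadHandler(upload) {
  return async function handler(req, res) {
    try {
      const { image } = JSON.parse(req.body);
      const results = await upload(image);
      res.status(200).json(results);
    } catch (error) {
      // Log on the server and send a readable message back to the app.
      console.error('Upload failed:', error);
      res.status(500).json({ error: error.message || 'Upload failed' });
    }
  };
}

// Minimal fake response object for trying the handler outside Next.js.
function createFakeResponse() {
  return {
    statusCode: null,
    body: null,
    status(code) { this.statusCode = code; return this; },
    json(payload) { this.body = payload; return this; }
  };
}

// Simulate a failing upload to see the error path.
const failingUpload = async () => { throw new Error('Simulated upload failure'); };
const fakeRes = createFakeResponse();
createUploadHandler(failingUpload)({ body: JSON.stringify({ image: 'data:,' }) }, fakeRes)
  .then(() => console.log(fakeRes.statusCode, fakeRes.body));
// 500 { error: 'Simulated upload failure' }
```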

Next we’ll learn how to set up autotagging and configure our upload to use it.

Step 4: Setting up autotagging in Cloudinary with Google Vision AI

Before we add any code to configure our auto tagging, we need to first enable Google Vision inside of our Cloudinary account.

Google Auto Tagging is an Add-on in Cloudinary which serves as a separate service with separate pricing. Luckily we get 50 categorizations free, which is handy for trying this out and simple usage.

Inside of Cloudinary, navigate to the Add-ons page and select Google Auto Tagging.

The page will drop down to show the details of this add-on, including pricing and a simple example of code usage.

Under the pricing, select the Free plan:

Then select Agree, where you should then see a success message that you were subscribed to the free plan.

That’s all we need to do inside of Cloudinary, so let’s head to our code.

Taking the code examples we saw in the Cloudinary add-on section and adapting them to Node.js, we can now add a new options object to our Cloudinary SDK upload function that enables Google Auto Tagging.

Inside of pages/api/upload.js, change the upload line to:

const results = await cloudinary.uploader.upload(image, {

categorization: 'google_tagging',

auto_tagging: 0.6

});

Here we’re stating that we want to use the Google Auto Tagging add-on for categorization and we want to tag anything where the confidence level is above 0.6 in a range from 0 to 1.

Now let’s try to upload an image again.

Once we get our results back, we’ll notice our data is much bigger than it was before. It includes our uploaded image’s tags that were automatically created for us by Google Auto Tagging.
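If you’d like to inspect the confidence scores yourself, the sketch below reads them from the response. The shape shown (info.categorization.google_tagging.data with tag and confidence fields) reflects my understanding of Cloudinary’s response format, and the values are invented for illustration, so compare it against what you actually get back.

```javascript
// Trimmed, hypothetical example of an upload response with categorization data.
const exampleResult = {
  tags: ['sky', 'mountain'],
  info: {
    categorization: {
      google_tagging: {
        status: 'complete',
        data: [
          { tag: 'sky', confidence: 0.97 },
          { tag: 'mountain', confidence: 0.91 },
          { tag: 'glacier', confidence: 0.42 }
        ]
      }
    }
  }
};

// Returns the labels whose confidence is above the given threshold.
function getConfidentLabels(result, threshold = 0.6) {
  const data = result?.info?.categorization?.google_tagging?.data ?? [];
  return data
    .filter((label) => label.confidence > threshold)
    .map((label) => label.tag);
}

console.log(getConfidentLabels(exampleResult));      // [ 'sky', 'mountain' ]
console.log(getConfidentLabels(exampleResult, 0.4)); // [ 'sky', 'mountain', 'glacier' ]
```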

In my example, I uploaded this picture of a mountain with stars in the sky from Unsplash.

Once I got back my results, I was able to see that Google Vision was pretty confident my image had a sky and a Mountain!

We can even navigate to our newly uploaded image inside of our Cloudinary account where we can see all of the tags that were applied.

By simply flipping on Google Auto Tagging and enabling it in our code, we were easily able to add useful labels and tags to our image!

Next we’ll look at a simple use case for how we can use these tags inside of our apps.

Step 5: Browsing Cloudinary images by tag in a Next.js app

In a previous tutorial, I walked through How to List & Display Cloudinary Image Resources in a Gallery with Next.js & React.

We’ll do something pretty similar here, where we’ll fetch our image resources, but we’ll first fetch all of our tags and base our resources request on a selected tag.

Note: we’re going to move a bit more quickly through this step, so if you want a more detailed walkthrough of similar functionality, be sure to check out that previous tutorial.

To start, we want to grab all of our tags.

We have a few options for how we do this, where we could grab our data inside one of the Next.js data fetching methods or we can continue with the API endpoint pattern we’re already using and create endpoints using our credentials and the SDK.

We’re going to go with creating new endpoints, so to start, let’s create an endpoint for our tags.

Create a new file tags.js under pages/api and add:

const cloudinary = require('cloudinary').v2;

cloudinary.config({

cloud_name: process.env.CLOUDINARY_CLOUD_NAME,

api_key: process.env.CLOUDINARY_API_KEY,

api_secret: process.env.CLOUDINARY_API_SECRET

});

export default async function handler(req, res) {

const tags = await cloudinary.api.tags({

max_results: 50

});

res.status(200).json(tags)

}

We’re configuring our Cloudinary SDK just like in our previous endpoint, but this time we’re using the tags method to grab up to 50 of the tags in our Cloudinary account.
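If your account ends up with more tags than fit in one request, you’d need to page through them. Here is a sketch of that loop, assuming each response includes a next_cursor value when more results are available, which is my understanding of how the Admin API paginates. The `fetchPage` function is injected and faked here so the example runs on its own; in the endpoint it might wrap cloudinary.api.tags.

```javascript
// `fetchPage` is injected; in the endpoint it might wrap something like
// cloudinary.api.tags({ max_results: 50, next_cursor: cursor }).
async function collectAllTags(fetchPage, maxPages = 10) {
  const all = [];
  let cursor;
  for (let page = 0; page < maxPages; page += 1) {
    const response = await fetchPage(cursor);
    all.push(...response.tags);
    cursor = response.next_cursor;
    if (!cursor) break; // no more pages
  }
  return all;
}

// Fake paged data standing in for real API responses.
const fakePages = {
  start: { tags: ['beach', 'forest'], next_cursor: 'page-2' },
  'page-2': { tags: ['mountain'] }
};
const fakeFetchPage = async (cursor) => fakePages[cursor || 'start'];

collectAllTags(fakeFetchPage).then((tags) => console.log(tags));
// [ 'beach', 'forest', 'mountain' ]
```

The maxPages guard is there so a bug or an unexpectedly large account can’t turn into an endless series of requests.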

To grab these tags, we’re going to use a mix of React state to store the data and useEffect to make the request for the tags.

Inside of pages/index.js first import the useEffect hook with:

import { useState, useEffect } from 'react';

Then at the top of the Home component function add:

const [tags, setTags] = useState();

useEffect(() => {

(async function run() {

const data = await fetch('/api/tags').then(r => r.json());

setTags(data.tags);

})()

}, []);

Finally let’s render that to our page.

Under our h1 title (or wherever you’d like) add:

{Array.isArray(tags) && (

<ul style={{

display: 'flex',

flexWrap: 'wrap',

justifyContent: 'center',

listStyle: 'none',

padding: 0,

margin: 0

}}>

{ tags.map(tag => {

return (

<li key={tag} style={{ margin: '.5em' }}>

<button>{ tag }</button>

</li>

)

})}

</ul>

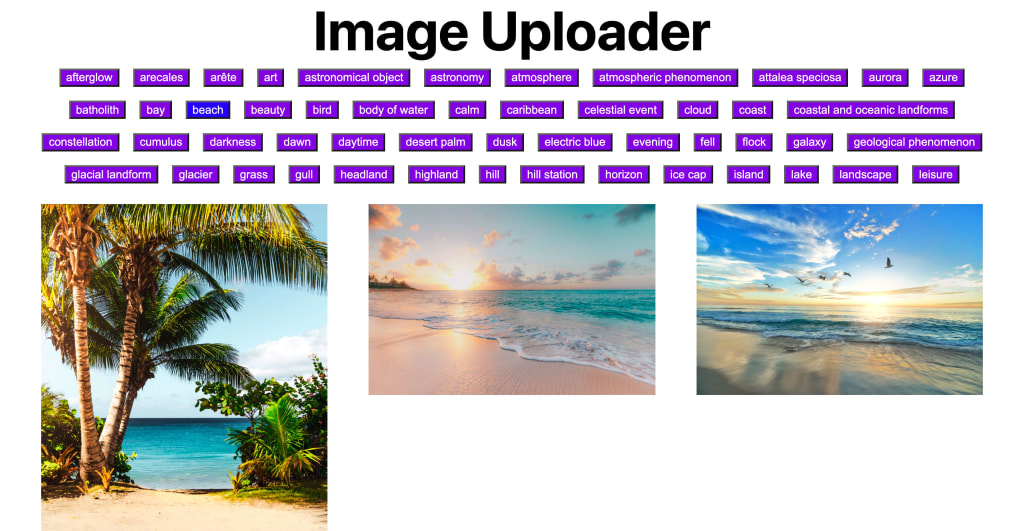

)}We’re mapping through all of our tags if they’re available, setting some styles to make it readable and usable, and creating a button for each tag.

While it could use some styling love, we should see something along of the following if you have any tags in your account (which you should from the last step).

Next, we want to allow someone to select a tag and make it “active” where we’ll then request all of the images associated with that tag.

We’ll do something similar to how we requested the tags in the first place, where we’ll store some of this data in state, but we’ll base our request off of the active tag value, so any time it changes, our useEffect hook will fire again.

First let’s create our API endpoint.

Create a file images.js inside of pages/api and inside add:

const cloudinary = require('cloudinary').v2;

cloudinary.config({

cloud_name: process.env.CLOUDINARY_CLOUD_NAME,

api_key: process.env.CLOUDINARY_API_KEY,

api_secret: process.env.CLOUDINARY_API_SECRET

});

export default async function handler(req, res) {

const { tag } = JSON.parse(req.body);

const resources = await cloudinary.api.resources_by_tag(tag);

res.status(200).json(resources)

}

We’re using the resources_by_tag method this time and passing in a tag that we got from the body of the request.
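The response includes more detail per image than our UI needs. If you’d rather send a smaller payload to the browser, one option is to keep only the fields the gallery uses. This is a sketch with a hypothetical helper and made-up resource data; the field names match the ones we render later in this step.

```javascript
// Keeps only the fields the gallery needs from each resource.
function toGalleryItems(resources = []) {
  return resources.map(({ asset_id, secure_url, width, height }) => ({
    asset_id,
    secure_url,
    width,
    height
  }));
}

// Made-up resource for illustration; real responses contain more fields.
const exampleResources = [
  {
    asset_id: 'abc123',
    public_id: 'sample',
    format: 'jpg',
    width: 1200,
    height: 800,
    secure_url: 'https://res.cloudinary.com/my-cloud/image/upload/v1/sample.jpg'
  }
];

console.log(toGalleryItems(exampleResources));
```

In the endpoint, that could look like responding with { resources: toGalleryItems(resources.resources) } so the app code reading data.resources keeps working.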

Now let’s actually POST to it!

Under the state and effect for tags inside of pages/index.js, add:

const [activeTag, setActiveTag] = useState();

const [images, setImages] = useState();

useEffect(() => {

(async function run() {

if ( !activeTag ) return;

const data = await fetch('/api/images', {

method: 'POST',

body: JSON.stringify({

tag: activeTag

})

}).then(r => r.json());

setImages(data.resources);

})()

}, [activeTag]);

This should look pretty similar to our tags code, but we’re using two instances of state: one to store the tag we’re actively looking up and one to store the images we get back for it.

Whenever that active tag changes, we’re POSTing that tag to our images endpoint.

To make that work, we need to wire up our tag buttons.

Update each tag button to include an onClick handler:

<button onClick={() => setActiveTag(tag)} style={{

color: 'white',

backgroundColor: tag === activeTag ? 'blue' : 'blueviolet'

}}>

{ tag }

</button>

We’re also checking whether the tag is active and changing its color a bit to make the selection more clear.

Then finally, let’s display the images.

Under our tags add:

{Array.isArray(images) && (

<ul style={{

display: 'grid',

gridGap: '1em',

gridTemplateColumns: 'repeat(auto-fit, minmax(150px, 1fr))',

listStyle: 'none',

padding: 0,

margin: 0

}}>

{ images.map(image => {

return (

<li key={image.asset_id} style={{ margin: '1em' }}>

<img src={image.secure_url} width={image.width} height={image.height} alt="" />

</li>

)

})}

</ul>

)}

Similar to our tags, we’re looping through each image and displaying it on the page.

Now once we open the app, we should see our tags load like before, but now let’s select a tag.

We should see any images that are associated with that tag!

If you only uploaded one image with tags, like the one in this walkthrough, you may only see one image.

Try to upload a few more images with the upload tool.

Once you do and reload the page, you’ll likely have more tags, and if you select a tag with multiple images, you’ll see them all on the page!

What else can we do?

Use search to allow people to manually look up images

Instead of using a huge list of tags, let people use text-based search.

Add a search input and use a tool like fuse.js to filter through the available tags.
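Before reaching for a library, a plain substring match can get you surprisingly far. The sketch below is not fuse.js and does no fuzzy matching; it’s a simple case-insensitive filter over a list of tags, using a hypothetical filterTags helper.

```javascript
// Case-insensitive substring filter over a list of tags.
// An empty query returns the full list.
function filterTags(tags, query) {
  const needle = query.trim().toLowerCase();
  if (!needle) return tags;
  return tags.filter((tag) => tag.toLowerCase().includes(needle));
}

console.log(filterTags(['Mountain', 'Sky', 'Snow'], 's'));   // [ 'Sky', 'Snow' ]
console.log(filterTags(['Mountain', 'Sky', 'Snow'], 'MOU')); // [ 'Mountain' ]
```

In the app, you could store the search input’s value in state and render filterTags(tags, query) instead of the full tags array.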

Better yet, add autocomplete with the ability to navigate the results with your keyboard. See how with How to Use Browser Event Listeners in React for Search and Autocomplete.

Add transformations to your media

Once your media is delivered through Cloudinary, you can easily transform it, from simple things like resizing to complex things like overlays.

For instance, if you have a photo gallery of people, similar to the People & Pets feature of Google Photos, you can use Cloudinary AI to find the faces in the image and crop intelligently to that area.

See how with How to Create Thumbnail Images Using Face Detection with Cloudinary.
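As a quick taste, Cloudinary transformations can be expressed as a URL segment placed right after /upload/. The sketch below inserts one into a delivery URL by hand. The helper name and the example URL (including the my-cloud Cloud Name) are placeholders, and in a real project you might prefer the SDK’s URL helpers over string manipulation.

```javascript
// Inserts a transformation segment right after "/upload/" in a delivery URL.
// Returns the URL unchanged if it doesn't contain that marker.
function withTransformation(secureUrl, transformation) {
  const marker = '/upload/';
  const index = secureUrl.indexOf(marker);
  if (index === -1) return secureUrl;
  const insertAt = index + marker.length;
  return secureUrl.slice(0, insertAt) + transformation + '/' + secureUrl.slice(insertAt);
}

// Placeholder URL for illustration.
const original = 'https://res.cloudinary.com/my-cloud/image/upload/v1/sample.jpg';

// Request a 300x300 thumbnail cropped around a detected face.
console.log(withTransformation(original, 'w_300,h_300,c_thumb,g_face'));
// https://res.cloudinary.com/my-cloud/image/upload/w_300,h_300,c_thumb,g_face/v1/sample.jpg
```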